Hi all

Following up on some recent Docker discussions, I thought a thread summarizing the related efforts would be a good next step. Below is a starting list of topics to iron out, and I invite everyone to participate and give input.

Topics

CLI

- Command to set up and generate configuration(s)

  - `yarn redwood setup deploy docker`: init the template files

    - options? E.g. kubernetes, etc.

  - `yarn redwood deploy docker`: I don’t think this makes sense. The recommendation might be for projects to create their own custom package.json script for deployment.

    - @jeliasson answer: Agreed.

- […]

Development

- Are there requests or an audience for local Docker development?

  - @thedavid answer: yes, there have been. I think it would be a great addition and use-case for Docker Compose. Using file mounting could work seamlessly.

- Database choice (SQLite, MySQL/MariaDB, Postgres, …)

- How will `yarn rwt ...` work?

- Link together development in a `docker-compose.yaml`

- […]
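To make the Docker Compose idea above a bit more concrete, here is a minimal sketch of what a development `docker-compose.yaml` could look like. Everything in it is an assumption for discussion, not an agreed design: the service names, image tags, credentials and the choice of Postgres are placeholders, and the ports assume Redwood's default dev ports (8910 for Web, 8911 for API).

```yaml
# Hypothetical development setup; names, tags and credentials are placeholders.
services:
  db:
    image: postgres:16              # placeholder tag; database choice is still open
    environment:
      POSTGRES_USER: redwood
      POSTGRES_PASSWORD: redwood
      POSTGRES_DB: redwood_dev
    ports:
      - "5432:5432"

  redwood:
    image: node:lts                 # placeholder; pin to a Node version Redwood supports
    working_dir: /app
    volumes:
      - .:/app                      # file mounting, so edits on the host reach the container
    environment:
      # "db" resolves to the database service on the Compose network
      DATABASE_URL: postgresql://redwood:redwood@db:5432/redwood_dev
    command: sh -c "yarn install && yarn redwood dev"
    ports:
      - "8910:8910"                 # Web dev server (assumed default port)
      - "8911:8911"                 # API dev server (assumed default port)
    depends_on:
      - db
```

With something like this, `docker compose up` would start both containers. One open point this sketch does not solve: the dev servers may need to listen on `0.0.0.0` rather than `localhost` to be reachable from the host.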

Production

Question:

Are there circumstances that require the option to run Web and API either as two separate services or as a single one?

- best practices would suggest unique services

- however, given `yarn redwood serve` running both the API server and serving the static Web files, I can foresee people wanting to “just get started prototyping” with a single service.

  - @jeliasson answer: “just get started prototyping” feels more like a development-phase concern than a production one. For production I think it makes sense to separate the services and make sure to use a web server that is better suited to serving static content and the rules that come with that. I’m not familiar with the web server being used with `yarn redwood serve`, but I guess it comes down to some benchmarks. In the end, production should be fast and flexible.


API

- Make a slim production image (Dockerize api-side)

- Figure out where and how database migrations and seeding would occur (see this comment for context)

- […]

Web

- Web server choice (Nginx vs Caddy vs …)

  - @thedavidprice answer: why not `yarn redwood serve web`? My preference would be to keep the service minimal. If you want to also use Nginx, etc., that could be an additional implementation per hosting/infra. I just think there’s going to be an infinite number of hosting options and alternatives for performance and caching, but all very specific to the host/infra being used.

  - @jeliasson answer: As Redwood is opinionated, I think it makes sense to choose a web server that does the best job. If that is the “built in one”, so be it. If not, we should aim for something that is more suitable and has been battle tested. As we’re talking about Docker, the hosting platform should not make any difference, and it will (should) run exactly the same on all hosting platforms. Everything else is just optional configuration, preferably with something that has great documentation for that.


- Baseline configuration (Cache, CORS, etc)

- […]

Other

- Overall file structure and naming of Dockerfiles (production vs development files?).

- Is Earthly just another dependency, or something that would make sense here?

- Should we maintain various CI/CD pipeline examples for deployments? (GitHub Actions, GitLab, …)

- I think these would make for a good Cookbook and/or Docs.

- @jeliasson answer: Agreed.


- …

Benchmarks

See the redwoodjs-docker repository.

References

- Repository: redwoodjs-docker

- Issue: Dockerize api

- Inspiration: Self-hosting RedwoodJS on Kubernetes

- Inspiration: Running in Docker

- Inspiration: Docker on Lambda or Lambda on Docker?

- Inspiration: Getting OG and meta tags working with nginx, pre-render.io and docker

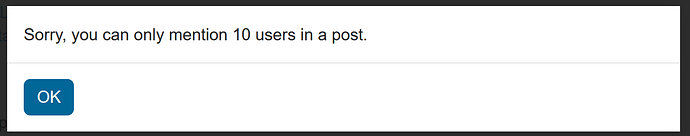

Edit: Strange. I could not save my edits without getting this error message. @thedavid, do you know why? In the meantime, I moved the /cc below to a code block.

/cc @ajcwebdev @benkraus @danny @peterp @pi0neerpat @pickettd @nerdstep @wiezmankimchi