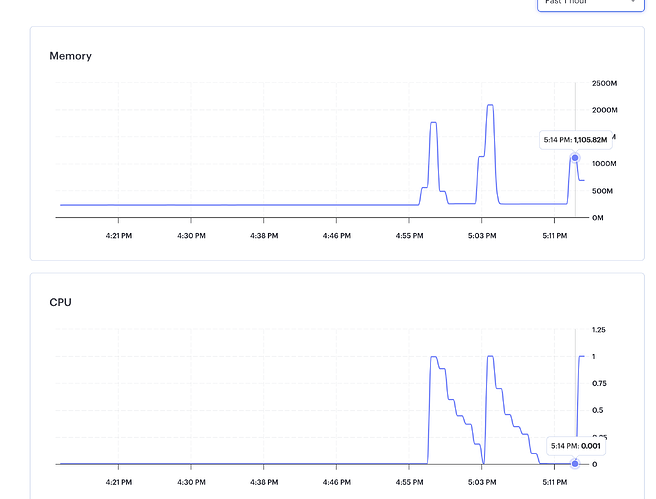

Quick update: we have been spending time on this in response to all the community feedback. We’ve been developing some tooling to help measure exactly what is happening and why the memory usage is so high. With that in place we can start exploring changes to bring the memory usage down, and we will keep this thread updated as we make progress.

@Josh-Walker-GM and I identified one change to `yarn rw build` that noticeably reduced memory usage locally.

While this is only one command, and it’s only a build-time change (not a runtime one), it’s a start, and we’ll keep chipping away at it. It’s available in the latest RC if anyone has the chance to try it; feedback would be appreciated:

Josh and I will most likely focus next on distributing the tooling we built to measure memory usage and the number of processes spawned, so that we can be sure that users’ machines behave more or less like ours.

Ran into this for the first time today in prod, not during deploy but during runtime. We’re on 6.3.3. Similar log messages and stacktrace to @deep-randhawa above.

I ran some heap profiles from VSCode locally with yarn rw dev but couldn’t seem to reproduce.

I’m not sure whether I tested this the right way, but I was still not able to deploy to Render without an out of memory issue.

What I did was:

1. Updated my project to 6.5.0.
2. Configured it to deploy to Render.
3. Pushed the change.
4. As expected, there was an out of memory failure during deployment.
5. Then I updated to 6.5.1-rc.0 and redeployed.
6. I still got the out of memory error during the deployment.

If there is any data that would help pinpoint the issue, I can try to find it if someone can tell me where to look.

One thing I did notice was that my schema on a fresh database was in place, which suggested that the Prisma migrations ran.

Wanted to add that the Node.js core team recently identified that Node.js v18 has a regression affecting memory usage. While Redwood is built for Node.js 18, we don’t use any of Node’s more experimental features like loaders, so I imagine Redwood would keep working on 20 (save the Prisma issue we just found on Netlify that we’re patching today). I could see some bugs coming up related to polyfills on 16, but can’t be sure yet. Has anyone tried changing the Node.js version to 16 or 20?

trying now!

Changed NODE_VERSION to 20 in render.yaml and managed to deploy successfully on the Render free tier. Thanks!
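For anyone trying the same switch, it can help to confirm which Node.js version the deployed process actually runs on, since the setting only matters if the host picks it up. The snippet below is a minimal sketch, not part of Redwood or Render: `nodeMajor` is a hypothetical helper name, and the only real API it relies on is `versions.node` from `node:process`.

```typescript
import { versions } from "node:process";

// Hypothetical helper: extract the major version from a Node.js version
// string such as "20.10.0" or "v18.19.0".
function nodeMajor(version: string): number {
  const match = /^v?(\d+)\./.exec(version);
  if (match === null) {
    throw new Error(`Unrecognized Node.js version: ${version}`);
  }
  return Number(match[1]);
}

// Log the version once at startup so it shows up in the deploy/runtime logs.
console.log(
  `Running on Node.js ${versions.node} (major ${nodeMajor(versions.node)})`
);
```

Logging this once at startup makes it easy to tell from the service logs whether a deploy really moved from 18 to 20.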

Confirming that I had a significant memory reduction from upgrading to version 20 as well. Still a lot, but about half of what it was before.

Render doesn’t show you memory usage on the free plan, but so far after switching to Node 20 my service has not crashed!

Thanks @dom and @Josh-Walker-GM

That said, I have an older project that I have been holding off upgrading. It’s currently on Node 18 with RW v5.3, and it’s pretty stable at ~400 MB memory usage.

So switching from Node 18 to 20 does seem to reduce memory load, but there’s still something going on with the dependencies between RW 5 and 6.

I spoke too soon, my service is still going OOM.

It’s definitely better on Node 20: on Node 18 it was pretty much guaranteed that any API call would push it above 512 MB. It’s still prone to happening on Node 20, though.
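To see how close a running service gets to a limit like the 512 MB mentioned here, one option is to log memory snapshots from inside the process. This is only a sketch under assumptions: `toMb`, `formatMemory`, `exceedsLimit`, and the `MemorySnapshot` interface are hypothetical names made up for illustration, and this is not the tooling the Redwood team built. The only real API used is `memoryUsage()` from `node:process`.

```typescript
import { memoryUsage } from "node:process";

// The subset of fields from memoryUsage() that this sketch reports (bytes).
interface MemorySnapshot {
  rss: number;
  heapTotal: number;
  heapUsed: number;
  external: number;
}

const BYTES_PER_MB = 1024 * 1024;

// Convert a byte count to megabytes, rounded to one decimal place.
function toMb(bytes: number): number {
  return Math.round((bytes / BYTES_PER_MB) * 10) / 10;
}

// Format one snapshot as a single log line.
function formatMemory(label: string, snapshot: MemorySnapshot): string {
  return (
    `[${label}] rss=${toMb(snapshot.rss)} MB ` +
    `heapUsed=${toMb(snapshot.heapUsed)} MB ` +
    `heapTotal=${toMb(snapshot.heapTotal)} MB ` +
    `external=${toMb(snapshot.external)} MB`
  );
}

// True when resident set size is above the given limit in MB.
// RSS is used here on the assumption that it is the figure closest
// to what a host's container memory limit is measured against.
function exceedsLimit(snapshot: MemorySnapshot, limitMb: number): boolean {
  return toMb(snapshot.rss) > limitMb;
}

// Take one snapshot at startup; call the same helpers around API
// handlers to compare memory before and after a request.
const startup = memoryUsage();
console.log(formatMemory("startup", startup));
if (exceedsLimit(startup, 512)) {
  console.warn("RSS is already above 512 MB");
}
```

Comparing `rss` with `heapUsed` gives a rough hint about whether growth is in the JavaScript heap or elsewhere (native memory, buffers), which is useful context when a local heap profile does not reproduce what production shows.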

Adding to thread:

Perhaps TS related?

Redwood v6.5.0 upgrades TypeScript to v5.3.2, and the latest TypeScript releases appear to be susceptible to memory errors on types that can recurse infinitely.

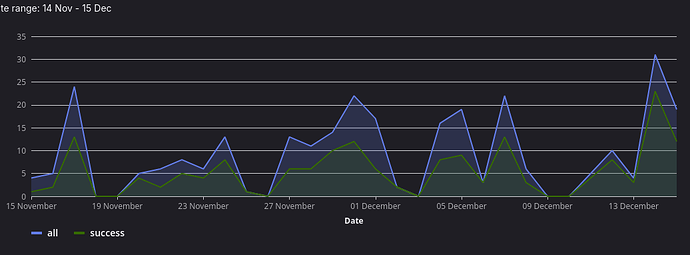

Right now it at least seems as if our test suite runs through more consistently. We upgraded to 6.5.1 on the 7th.

(Yes, not all failures are timeout related, but still.)

This is still a problem. Officially Redwood uses Node 18.x, but setting that as the Node version seems to prevent Render deploys from working correctly…

Update: v7 will default to Node v20.